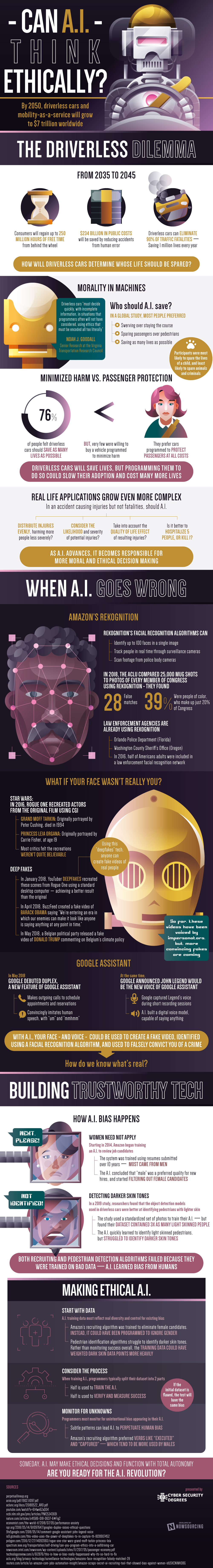

Can AI Think Ethically?

By 2050, driverless cars and mobility-as-a-service will grow into a $7 trillion market worldwide

The Driverless Dilemma

From 2035 to 2045

– Consumers will regain up to 250 million hours of free time from behind the wheel

– $234 billion in public costs will be saved by reducing accidents from human error

– Driverless cars can eliminate 90% of traffic fatalities, saving 1 million lives every year

How will driverless cars determine whose life should be spared?

Morality in Machines

Driverless cars “must decide quickly, with incomplete information, in situations that programmers often will not have considered, using ethics that must be encoded all too literally”

– Noah J. Goodall, Senior Research Scientist at the Virginia Transportation Research Council

Who Should AI Save?

In a global study, most people preferred:

– Swerving over staying the course

– Sparing passengers over pedestrians

– Saving as many lives as possible

Participants were most likely to spare the lives of children, and least likely to spare animals and criminals

Minimized Harm vs. Passenger Protection

– 76% of people felt driverless cars should save as many lives as possible

BUT, very few were willing to buy a vehicle programmed to minimize harm

– They preferred cars programmed to protect passengers at all costs

– Driverless cars will save lives, but programming them to minimize overall harm could slow their adoption and cost many more lives

Real Life Applications Grow Even More Complex

In an accident causing injuries but not fatalities, should AI:

– Distribute injuries evenly, harming more people less severely?

– Consider the likelihood and severity of potential injuries?

– Take into account the quality of life effect of resulting injuries?

Is it better to hospitalize 5 people, or kill 1?
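One way to see why these questions are hard to encode: any numeric answer depends on how severe a hospitalization is judged to be relative to a death. The sketch below is illustrative only. The `expected_harm` and `compare` functions and the severity weights are hypothetical, not taken from any study or real vehicle software.

```python
def expected_harm(outcomes):
    """Sum probability * severity * people over (probability, severity, people) tuples."""
    return sum(p * severity * people for p, severity, people in outcomes)


def compare(hospital_severity):
    """Return the option with the lower harm score.

    hospital_severity is a hypothetical weight for one hospitalization
    relative to one death (death = 1.0). Choosing it is a value judgment.
    """
    hospitalize_five = expected_harm([(1.0, hospital_severity, 5)])
    kill_one = expected_harm([(1.0, 1.0, 1)])
    return "hospitalize 5" if hospitalize_five < kill_one else "kill 1"


# Two assumed weights produce opposite answers to the same question.
for weight in (0.1, 0.3):
    print(f"hospitalization weighted {weight}: lower score for '{compare(weight)}'")
```

Under these assumptions the answer flips once a hospitalization is weighted above 0.2 of a death, so the "decision" is really made by whoever picks the weight.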

As AI advances, it becomes responsible for more moral and ethical decision making

When AI Goes Wrong

Amazon’s Rekognition

Rekognition’s facial recognition algorithms can:

– Identify up to 100 faces in a single image

– Track people in real time through surveillance cameras

– Scan footage from police body cameras

In 2018, the ACLU used Rekognition to compare 25,000 mug shots to photos of every member of Congress and found 28 false matches

– 39% were people of color, who make up just 20% of Congress
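The gap between those two percentages can be made concrete with a little arithmetic. This is a minimal sketch using only the figures quoted above (28 false matches, 39%, 20%). The `overrepresentation` function is an illustrative name, not something from the ACLU report.

```python
def overrepresentation(share_of_errors, share_of_population):
    """Ratio of a group's share of errors to its share of the population.

    A value of 1.0 means errors fall on the group in proportion to its size.
    """
    return share_of_errors / share_of_population


false_matches = 28      # false matches found in the test
share_errors = 0.39     # people of color among the false matches
share_congress = 0.20   # people of color in Congress

ratio = overrepresentation(share_errors, share_congress)
approx_count = round(false_matches * share_errors)

print(f"Roughly {approx_count} of {false_matches} false matches were people of color")
print(f"Overrepresentation ratio: {ratio:.2f}x")
```

In other words, people of color appeared among the false matches at roughly twice the rate their share of Congress would predict.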

Law enforcement agencies are already using Rekognition

– Orlando Police Department (Florida)

– Washington County Sheriff’s Office (Oregon)

In 2016, half of American adults were included in a law enforcement facial recognition network

What If Your Face Wasn’t Really You?

Star Wars

In 2016, Rogue One recreated actors from the original film using CGI

– Grand Moff Tarkin: Originally portrayed by Peter Cushing, who died in 1994

– Princess Leia Organa: Originally portrayed by Carrie Fisher, at age 19

Most critics felt the recreations weren’t quite believable

Deep Fakes

In January 2018, YouTuber derpfakes recreated these scenes from Rogue One using a standard desktop computer, achieving a better result than the original

Using this “deepfakes” tech, anyone can create fake videos of real people

In April 2018, BuzzFeed created a fake video of Barack Obama saying

“We’re entering an era in which our enemies can make it look like anyone is saying anything at any point in time.”

In May 2018, a Belgian political party released a fake video of Donald Trump commenting on Belgium’s climate policy

So far, these videos have been voiced by impersonators, but more convincing fakes are coming

Google Assistant

In May 2018, Google debuted Duplex, a new feature of Google Assistant

– Makes outgoing calls to schedule appointments and reservations

– Convincingly imitates human speech, with “um” and “mmhmm”

– At the same time, Google announced John Legend would be the new voice of Google Assistant

— Google captured Legend’s voice during short recording sessions

AI built a digital voice model, capable of saying anything

With AI, your face (and voice) could be used to create a fake video, identified using a facial recognition algorithm, and used to falsely convict you of a crime

How do we know what’s real?

Building Trustworthy Tech

How AI Bias Happens

Women Need Not Apply

– Starting in 2014, Amazon began training an AI to review job candidates

– The system was trained using resumes submitted over 10 years, most of which came from men

– The AI concluded that “male” was a preferred quality for new hires, and started filtering out female candidates

Detecting Darker Skin Tones

– In a 2019 study, researchers found that the object detection models used in driverless cars were better at identifying pedestrians with lighter skin

– The researchers used a standardized set of photos to train their AI, but found the dataset contained 3x as many light-skinned people

– The AI quickly learned to identify light-skinned pedestrians, but struggled to identify darker skin tones

Both recruiting and pedestrian detection algorithms failed because they were trained on bad data: AI learned bias from humans

Making Ethical AI

Start With Data

AI training data must reflect real diversity and control for existing bias

Amazon’s recruiting algorithm learned to filter out female candidates

Instead, it could have been programmed to ignore gender

Pedestrian identification algorithms struggle to identify darker skin tones

Rather than monitoring success overall, the training process could have weighted examples of darker skin more heavily
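Weighting underrepresented examples more heavily can be sketched as inverse-frequency weighting. This is one common approach, not necessarily what the researchers used. The function name and the toy labels are hypothetical, chosen to mirror the 3x imbalance mentioned earlier.

```python
from collections import Counter


def inverse_frequency_weights(labels):
    """Weight each example inversely to how common its group is,
    so every group contributes the same total weight to training."""
    counts = Counter(labels)
    n_groups = len(counts)
    total = len(labels)
    return [total / (n_groups * counts[group]) for group in labels]


# Hypothetical toy dataset: 3 light-skinned examples for every dark-skinned one.
groups = ["light", "light", "light", "dark"]
weights = inverse_frequency_weights(groups)

for group, weight in zip(groups, weights):
    print(f"{group}: weight {weight:.2f}")
```

With these weights, the three "light" examples together count as much as the single "dark" example, so the minority group has equal influence on the training objective.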

Consider The Process

When training AI, programmers typically split their dataset into 2 parts

Half is used to train the AI

Half is used to verify and measure success

If the initial dataset is flawed, the test will have the same bias
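The point about the test half inheriting the same bias can be illustrated with a simple random split. This is a sketch under stated assumptions: the dataset, its 75/25 group mix, and the helper names `split_half` and `share` are all hypothetical.

```python
import random


def split_half(items, seed=0):
    """Shuffle a copy of the dataset and split it into two halves."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    mid = len(shuffled) // 2
    return shuffled[:mid], shuffled[mid:]


def share(items, group):
    """Fraction of items that belong to the given group."""
    return sum(1 for item in items if item == group) / len(items)


# Hypothetical skewed dataset: 75% group A, 25% group B.
data = ["A"] * 750 + ["B"] * 250
train, test = split_half(data)

print(f"Group B share in train: {share(train, 'B'):.0%}")
print(f"Group B share in test:  {share(test, 'B'):.0%}")
```

Both halves stay close to the original skew, so a model that underperforms on group B can still score well on a test set where group B is just as scarce.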

Monitor For Unknowns

Programmers must monitor for unintentional bias appearing in their AI

Subtle patterns can lead AI to perpetuate human bias

Amazon’s recruiting algorithm preferred verbs like “executed” and “captured”, which tend to be used more often by men
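One simple way to monitor for this kind of proxy is to check how often a term appears in each group's documents. The sketch below is illustrative: the function name, the group labels, and the toy resumes are hypothetical and are not drawn from Amazon's system or data.

```python
from collections import Counter


def term_rate_by_group(documents, term):
    """Fraction of documents in each group that contain `term`.

    `documents` is a list of (group, text) pairs. Group labels are used
    for auditing only, not as a model input.
    """
    totals, hits = Counter(), Counter()
    for group, text in documents:
        totals[group] += 1
        if term in text.lower().split():
            hits[group] += 1
    return {group: hits[group] / totals[group] for group in totals}


# Hypothetical toy resumes.
resumes = [
    ("m", "Executed product launch and captured new market"),
    ("m", "Executed migration plan"),
    ("f", "Led product launch and grew new market"),
    ("f", "Executed quarterly audit"),
]

rates = term_rate_by_group(resumes, "executed")
print(rates)
```

A large gap between groups suggests the term may act as a stand-in for gender, even if gender itself is never given to the model.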

Someday, AI may make ethical decisions and function with total autonomy

Are you ready for the AI revolution?

Sources:

https://www.ncbi.nlm.nih.gov/pmc/articles/PMC5343691/

https://newsroom.intel.com/newsroom/wp-content/uploads/sites/11/2017/05/passenger-economy.pdf

https://www.technologyreview.com/s/612876/this-is-how-ai-bias-really-happensand-why-its-so-hard-to-fix/

https://arxiv.org/pdf/1902.11097.pdf

https://www.reuters.com/article/us-amazon-com-jobs-automation-insight/amazon-scraps-secret-ai-recruiting-tool-that-showed-bias-against-women-idUSKCN1MK08G

https://9to5google.com/2018/05/14/comment-google-assistant-john-legend-voice/

https://www.npr.org/2018/05/14/611097647/googles-duplex-raises-ethical-questions

https://www.youtube.com/watch?v=614we6ZaQ04

https://io9.gizmodo.com/this-video-uses-the-power-of-deepfakes-to-re-capture-th-1828907452

https://www.economist.com/the-world-if/2018/07/05/performance-anxiety

https://spectrum.ieee.org/transportation/self-driving/can-you-program-ethics-into-a-selfdriving-car

https://www.nature.com/articles/s41586-018-0637-6#Fig2

https://www.aclu.org/blog/privacy-technology/surveillance-technologies/amazons-face-recognition-falsely-matched-28

https://www.aclunc.org/docs/20180522_ARD.pdf

https://www.polygon.com/2016/12/27/14092060/rogue-one-star-wars-grand-moff-tarkin-princess-leia

https://www.perpetuallineup.org